Introduction

GitOps is an operating model for Kubernetes and other cloud-native technologies. It provides a set of best practices that unify deployment, management, and monitoring for containerized clusters and applications. Terraform, meanwhile, is a tool that lets DevOps teams operate infrastructure through code, a practice known as IaC (Infrastructure as Code). What if we combine the two, so that provisioning infrastructure becomes as routine as creating a new class in Java programming? That is what we are going to find out in this article.

Prerequisites

For this demonstration we use a typical setup: AWS as the cloud provider and CircleCI as the CI/CD service. It is certainly possible to get the same result with other providers. Before we start, here’s what to prepare:

- An AWS account with an aws_access_key_id and aws_secret_access_key.

- A CircleCI account and a Git repository integrated with it.

- Terraform and the AWS command line tool installed on your local workstation for testing.

Architecture

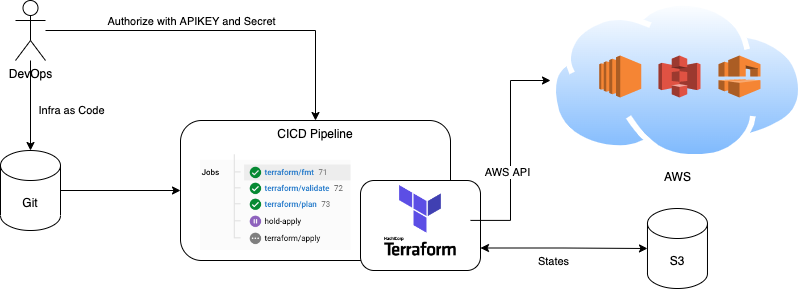

The diagram above shows how the operation works in general.

We push our Terraform code to a Git repository that a CircleCI pipeline project watches for commits. When a new commit triggers the pipeline and the conditions we configured are met, a Terraform Docker container is launched by way of an orb, CircleCI’s reusable configuration package. Using the API secrets we saved in CircleCI, Terraform retrieves or generates a state file stored on AWS S3. The state file is used to plan operations on the infrastructure by comparing its current state with the desired state defined in our Terraform code. Once a DevOps engineer manually approves the plan, Terraform calls the AWS API to perform the operations. Sounds like a good plan? Let us look at the key actions needed to make this work.

Key Steps

First of all, we need to create a Terraform project that declares the resources or infrastructure we want. Terraform uses a language called HCL to define infrastructure. Here’s a demo below, but we won’t dive into details this time because that would be a whole new topic. Visit the official Terraform documentation for more instructions.
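
As a rough illustration of what such a project might look like, here is a minimal sketch in HCL. It is not the original demo: the resource names, CIDR ranges, region, instance type, and the ami_id variable are all assumptions chosen for illustration, so adjust them to your own account.

```hcl
# Minimal sketch of a Terraform project; every name and value is a placeholder.
terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"
    }
  }
}

provider "aws" {
  region = "us-east-1" # assumed region
}

# A VPC to hold the demo resources.
resource "aws_vpc" "demo" {
  cidr_block = "10.0.0.0/16"

  tags = {
    Name = "gitops-demo"
  }
}

# One subnet inside the VPC.
resource "aws_subnet" "demo" {
  vpc_id     = aws_vpc.demo.id
  cidr_block = "10.0.1.0/24"
}

# The AMI is supplied by the caller instead of being hard-coded.
variable "ami_id" {
  description = "AMI to launch; supply one that is valid in your region"
  type        = string
}

# A single EC2 instance placed in the subnet.
resource "aws_instance" "demo" {
  ami           = var.ami_id
  instance_type = "t3.micro"
  subnet_id     = aws_subnet.demo.id
}
```

The AMI is passed in as a variable because AMI IDs differ from region to region, so no single hard-coded value would work everywhere.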

Before submitting this project to Git, you may want to try it with a local Terraform installation. But first you have to set up your AWS command line tool with your aws_access_key_id and aws_secret_access_key, for example by running aws configure.

Then try Terraform out by running terraform init, terraform plan, and terraform apply to see if the code works.

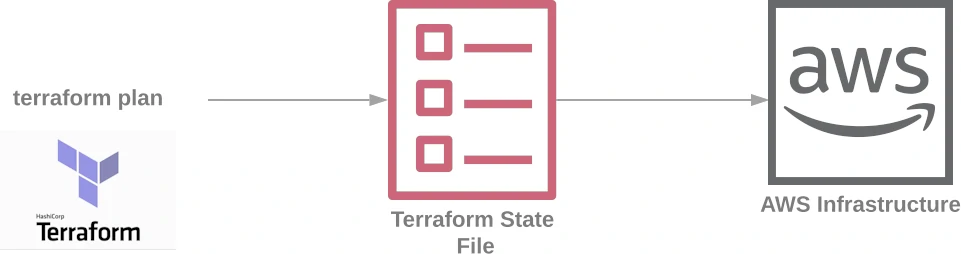

Hopefully, your code works as expected and gets your VPC, EC2 instances, and so on ready for you. If not, make some changes to your code and just run terraform apply again, without worrying about what has been left behind by previously interrupted actions. Since Terraform saves a local state file by default, it knows which step to begin with.

However, it’s not a good idea to save the state file in the Git repository alongside the code, because sensitive information such as usernames, passwords, and certificates is stored in plaintext in the state file. We also can’t just leave the state file on a local machine: it is important to keep the file in a safe place that everyone on the team can access, or we may lose track of the state of the infrastructure and terraform apply may end with wrong results.

Terraform supports several remote services for storing its state file, known as backends; see the Terraform backend configuration docs for more information. We use AWS S3 in this demo, and here is how to configure it.

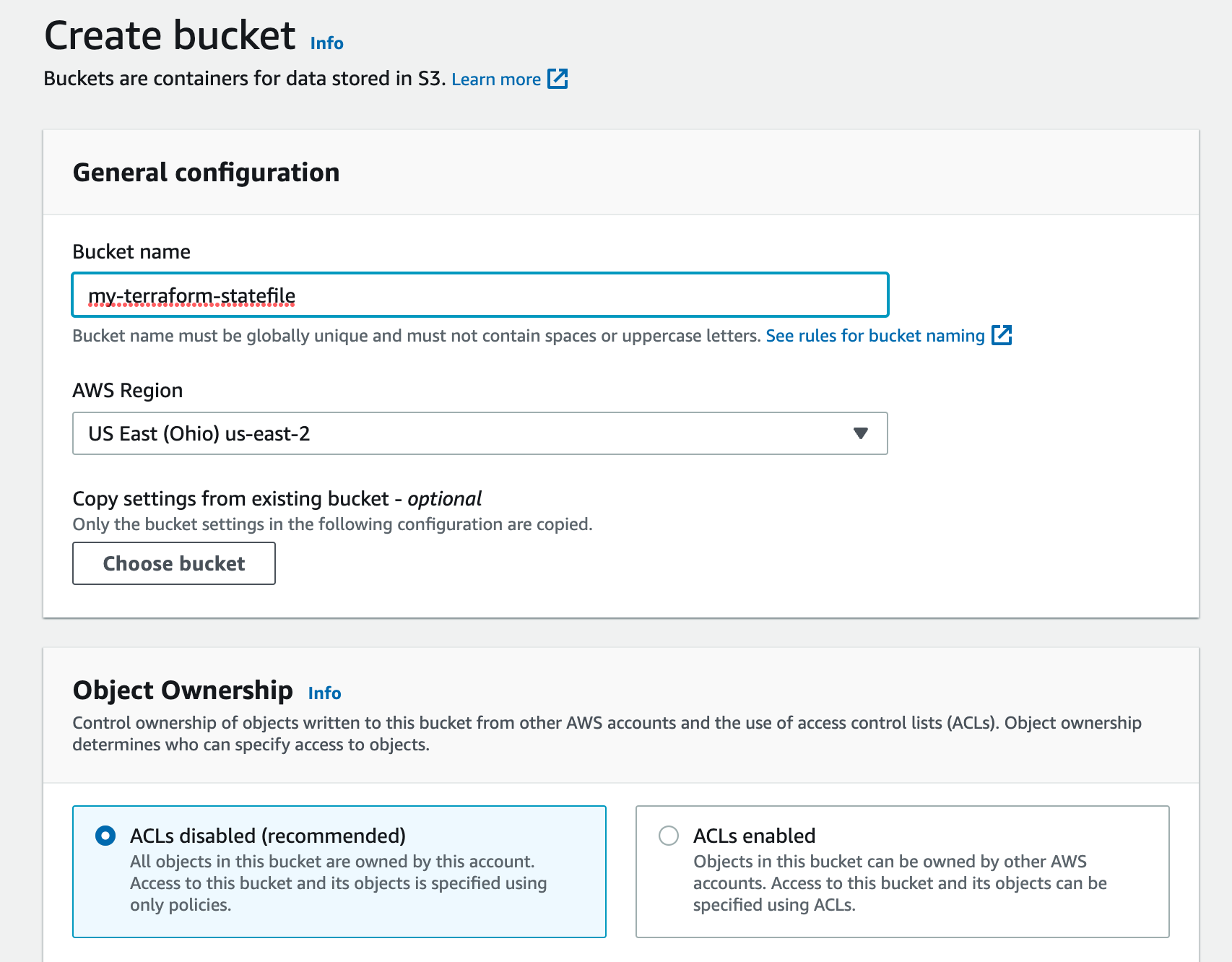

Create an S3 bucket in your AWS S3 service.

Add the backend config to your Terraform code.
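
A backend block for S3 could look roughly like the sketch below. The bucket name, key, and region are hypothetical placeholders; the bucket has to be the one created in the previous step.

```hcl
# Sketch of an S3 backend configuration; all values are placeholders.
terraform {
  backend "s3" {
    bucket = "my-terraform-state-bucket"     # hypothetical bucket name
    key    = "gitops-demo/terraform.tfstate" # hypothetical path of the state object
    region = "us-east-1"                     # region the bucket lives in
  }
}
```

After adding the block, run terraform init again so that Terraform can switch to the new backend and offer to copy any existing local state into it.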

Now you can run your Terraform code anywhere with your AWS credentials.

With a remote backend configured, we are able to run Terraform on CI/CD service providers such as CircleCI, Travis CI, GitHub, and so on. We choose CircleCI for the demo since it provides a Terraform orb that has everything ready to run. Alternatively, we can build our own Docker image that includes Terraform, the AWS command line client, and aws-iam-authenticator. The demo pipeline is defined in a YAML config that runs the Terraform lifecycle.

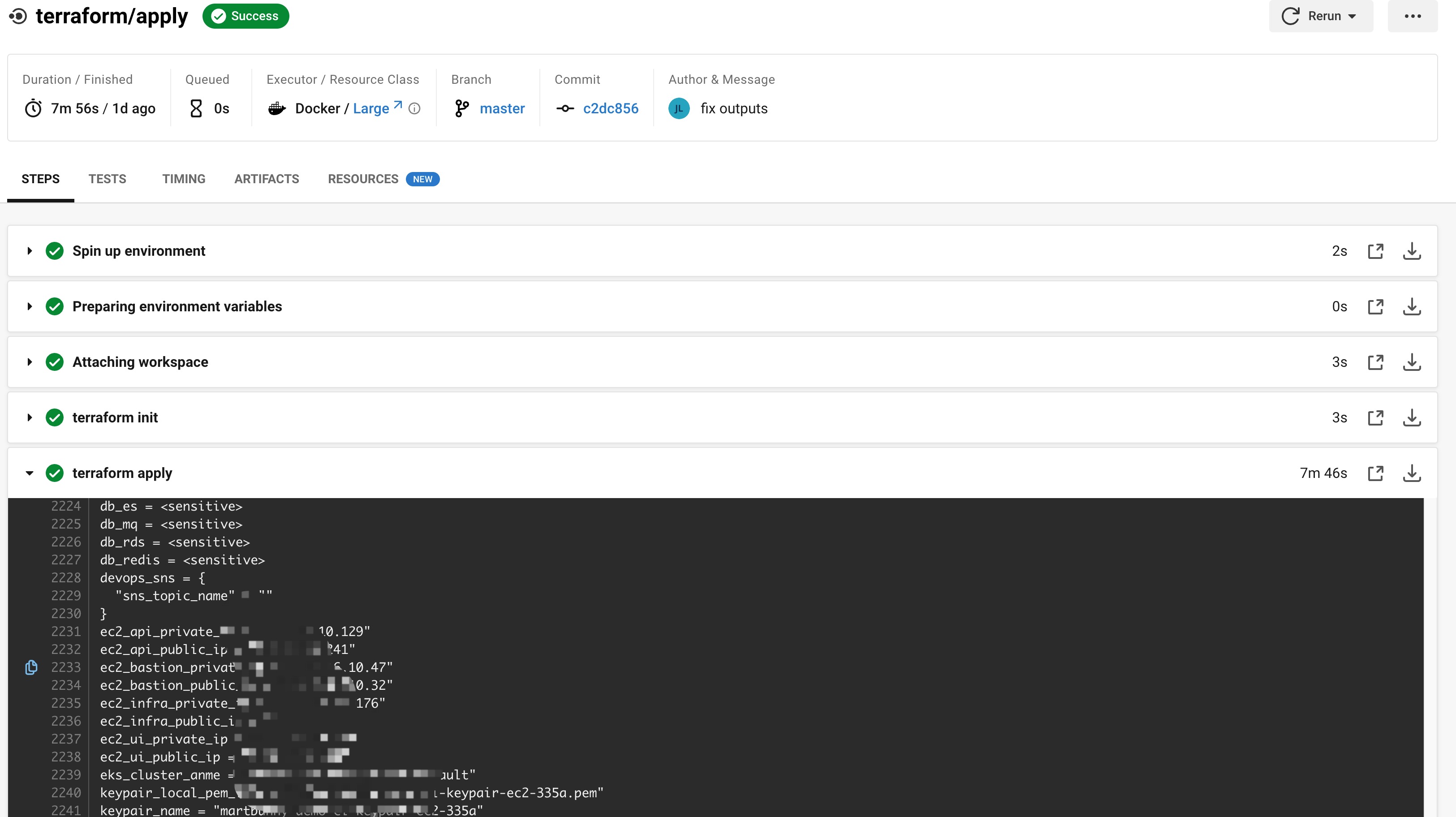

We add a type: approval job so that the workflow runs the final apply after a manual approval is granted.

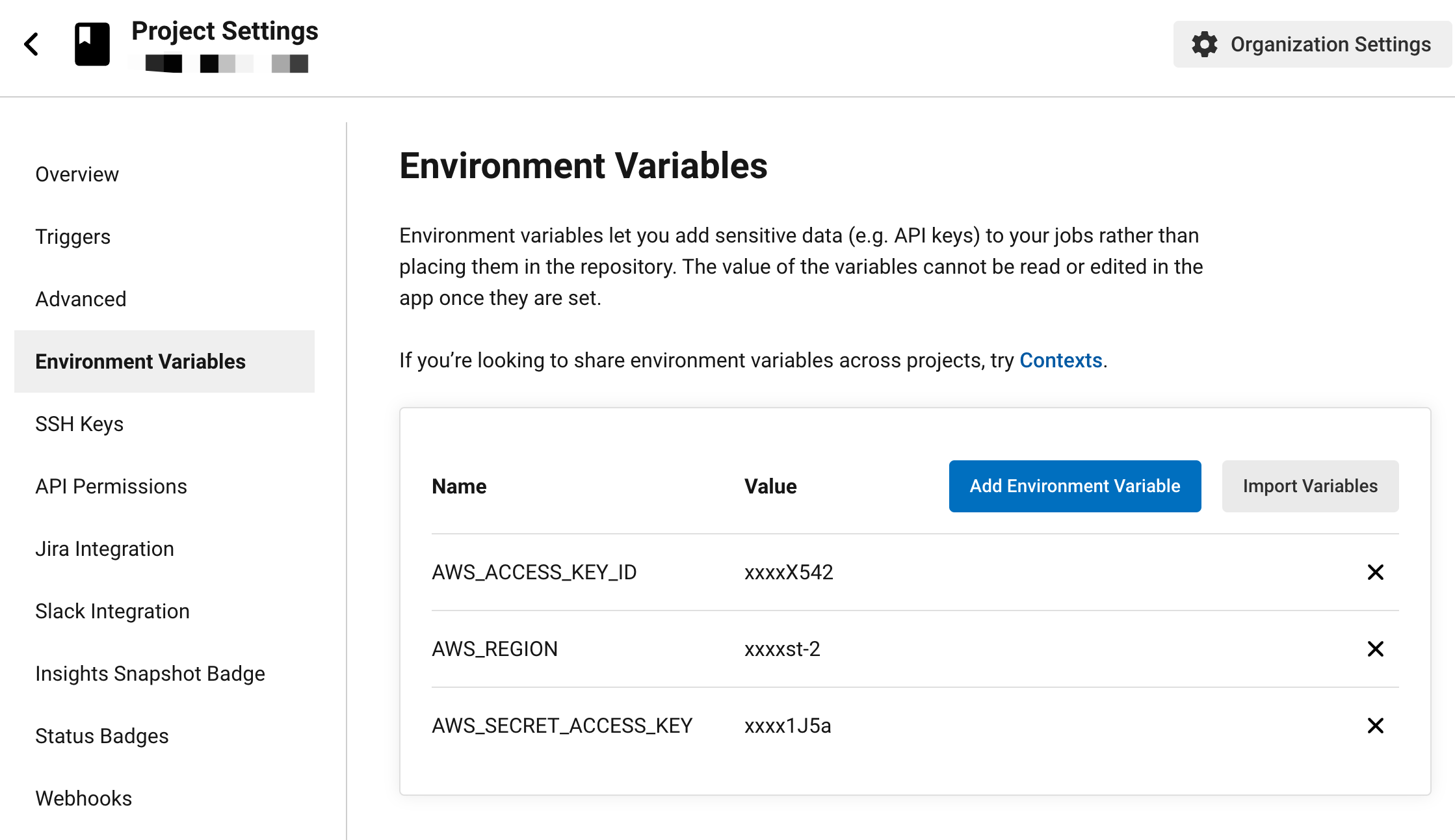

Furthermore, we have to authorize Terraform to access the AWS API using our credentials. Terraform’s AWS provider honors environment variables for this, including AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY.

Hence we can just add these in the CircleCI project settings:

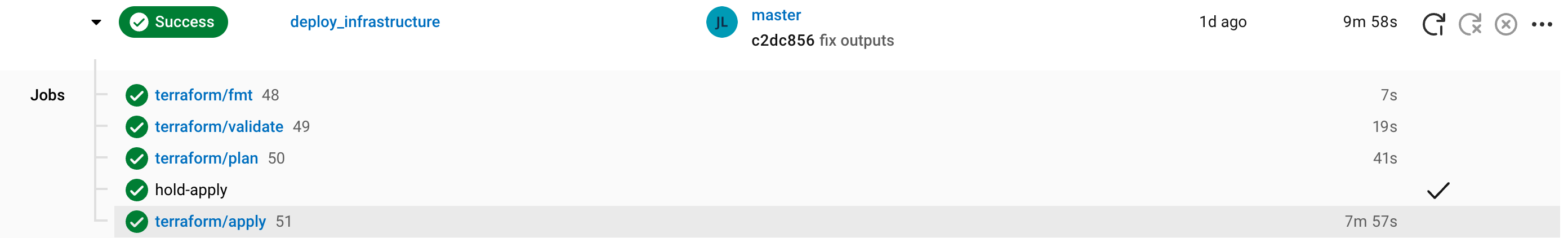
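
Because the credentials arrive through the environment, the provider block in the Terraform code can stay free of secrets. The sketch below illustrates the idea; the region value is an assumption, and the block stands for the provider block already present in the project rather than an additional one.

```hcl
# Sketch: the provider block carries no credentials.
provider "aws" {
  # Intentionally no access_key or secret_key arguments here.
  # The provider reads AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY
  # from the environment that the CI job sets up.
  region = "us-east-1" # assumed region
}
```

Keeping the keys out of the code means the same repository can run on a workstation or in the pipeline, each with its own credentials.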

Now we have everything set up. Whenever we push Terraform code commits, a pipeline is triggered to check the state and operate the infrastructure on the cloud provider as we defined.

Summary

Thanks to the AWS open API, Terraform, and CI/CD service providers, we managed to operate AWS infrastructure automatically by submitting Terraform code to a Git repository. There may be other ways to do so, but Terraform is definitely a road that has already been paved with a set of providers and modules, which makes GitOps feel not so far away.